Hands On

Curated List of Data Engineering Projects

- What We Want To Do

- Setup Local Environment

- Big Data Framework

- Kappa Data Pipeline aka Realtime using AWS

- Data Modeling and Analytic Engineering

- Data pipeline with Open Source Mage AI and Clickhouse

- AWS Ingestion Pipeline

- Azure Data Pipeline in 1 hour

- Design ETL Pipeline for Interview Assessment

- How to do everything

What We Want To Do

- Data pipeline

- Video streaming analysis pipeline

- Practice coding and big data frameworks

- Get hands-on with cloud providers

- Set up quickly and learn to adapt to each project

- Wrap up the knowledge and start a new project

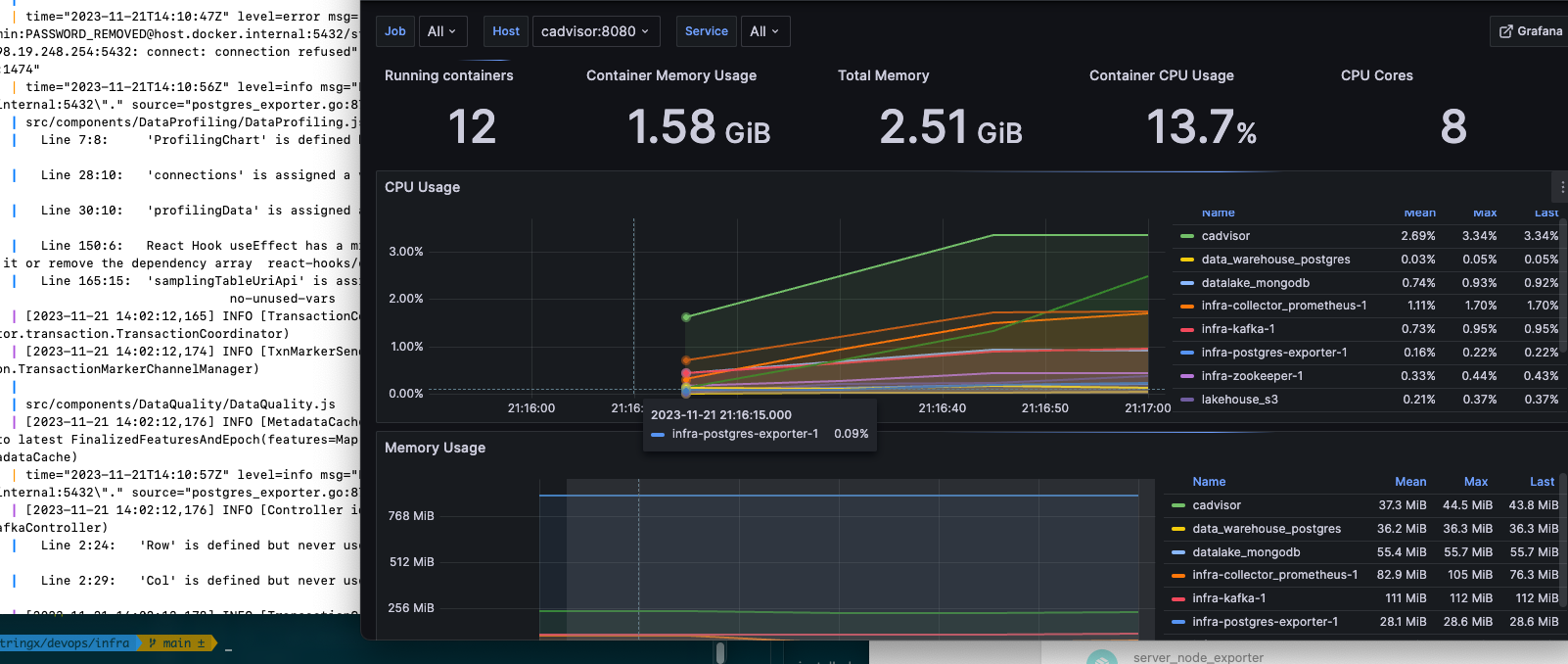

Setup Local Environment

As data engineers and developers, we’ve spent hours configuring terminals and services for different development tools.

Check out the Setup Data Dev Env repo for local environment setup.

This video shows how I configured my MacBook M1 for data engineering projects: 11 containers running on 8 GB of RAM on the base-model MacBook M1.

A powerful machine helps as the data and projects grow, but make the most of what you’ve got and start with the machine you already have.

Before starting these practice projects, review the fundamentals of data engineering: check Section 1 and Section 2 to find out which knowledge you are missing.

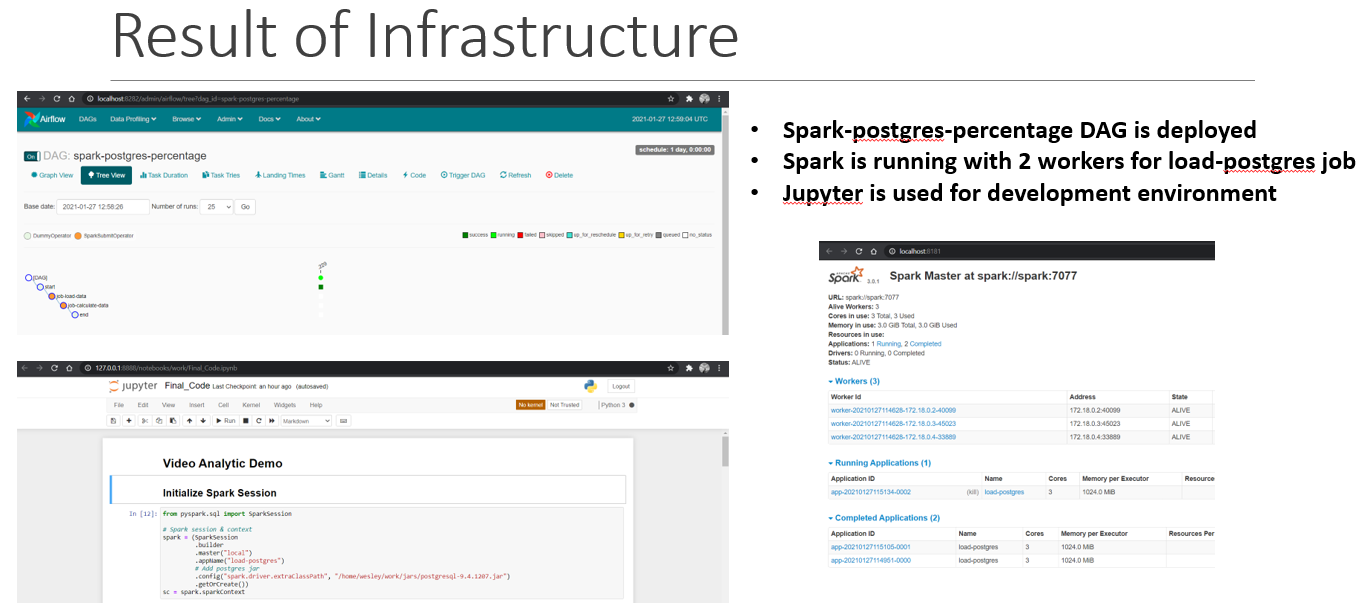

Big Data Framework

- Target: Get familiar with big data frameworks: process data with Spark and set up the basic components of a data pipeline using Docker containers

- Stack: Apache Spark, Apache Airflow, Python

- Implementation: Check out my repo Streaming data pipeline

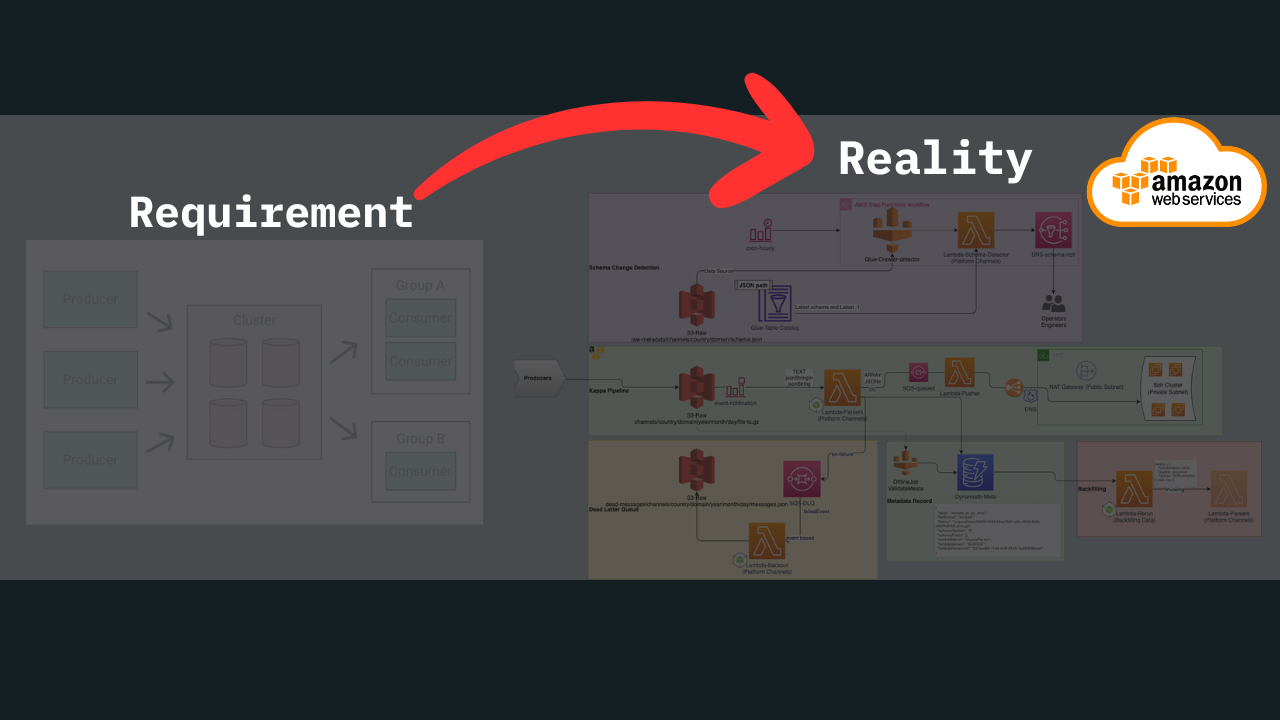
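Spark aggregations such as `reduceByKey` follow a map, shuffle, reduce pattern: each record is mapped to key/value pairs, pairs with the same key are moved to the same partition, and each partition is reduced independently. The sketch below mimics that flow on a single machine in plain Python so the three stages are easy to see. It is not Spark's API; the partition count and sample lines are illustrative assumptions.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 2  # stand-in for the number of Spark partitions (assumption)


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle(pairs, num_partitions=NUM_PARTITIONS):
    """Shuffle: group values by key, sending each key to exactly one partition."""
    partitions = [defaultdict(list) for _ in range(num_partitions)]
    for key, value in pairs:
        # crc32 is stable across processes, unlike Python's salted str hash()
        index = zlib.crc32(key.encode("utf-8")) % num_partitions
        partitions[index][key].append(value)
    return partitions


def reduce_phase(partitions):
    """Reduce: sum the values of each key, one partition at a time."""
    counts = {}
    for partition in partitions:
        for key, values in partition.items():
            counts[key] = sum(values)
    return counts


lines = ["Spark processes data", "Airflow schedules Spark jobs"]
counts = reduce_phase(shuffle(map_phase(lines)))
print(sorted(counts.items()))
# [('airflow', 1), ('data', 1), ('jobs', 1), ('processes', 1), ('schedules', 1), ('spark', 2)]
```

In Spark itself the shuffle moves data between executors over the network, which is why it is usually the most expensive stage of a job.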

Kappa Data Pipeline aka Realtime using AWS

- Target: Start building data pipelines on AWS, for example if you want to take on freelance projects and help clients get started with a modern data pipeline

- Stack: AWS data stack (Lambda functions, Step Functions, Glue), Solr cluster, etc.

- Implementation: Check out the blog post Built Real-time data pipeline on AWS and the YouTube video of the same name, Built Real-time data pipeline on AWS

- Example: Private Content

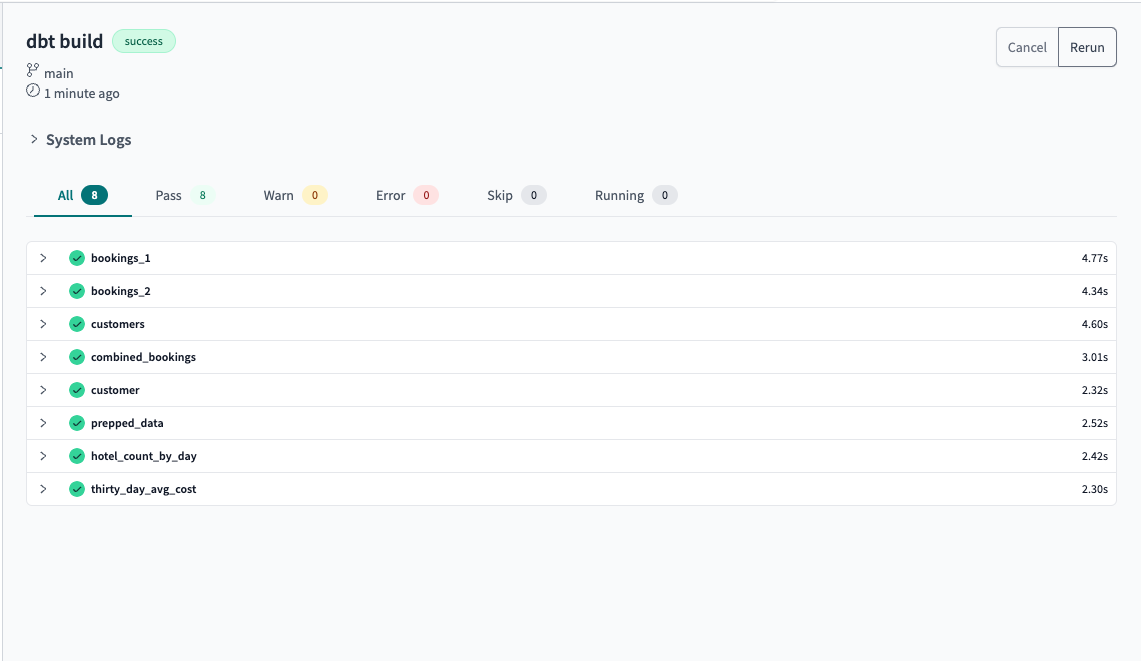
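A Kappa architecture keeps a single processing path: events land in an append-only log, a stream processor builds the serving views from that log, and reprocessing (for example after a logic fix) means replaying the log from the beginning instead of running a separate batch layer. The pure-Python sketch below illustrates the idea with an in-memory list standing in for a durable stream such as Amazon Kinesis or Apache Kafka. The class names, event fields, and the cents-to-dollars fix are hypothetical and not taken from the linked project.

```python
from collections import defaultdict


class EventLog:
    """Append-only event log; stands in for a durable stream (assumption)."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read_from(self, offset):
        # Yield (offset, event) pairs from the given offset to the current end
        for i in range(offset, len(self._events)):
            yield i, self._events[i]


class StreamProcessor:
    """The single processing path: keeps a running total per user."""

    def __init__(self, log, extract_amount):
        self.log = log
        self.extract_amount = extract_amount
        self.offset = 0  # next offset to consume
        self.totals = defaultdict(float)

    def poll(self):
        # Consume only the events appended since the last poll
        for offset, event in self.log.read_from(self.offset):
            self.totals[event["user"]] += self.extract_amount(event)
            self.offset = offset + 1


def amount_v1(event):
    return event["amount"]  # bug: treats cents as dollars


def amount_v2(event):
    return event["amount"] / 100  # fix: convert cents to dollars


log = EventLog()
for user, cents in [("alice", 1250), ("bob", 300), ("alice", 50)]:
    log.append({"user": user, "amount": cents})

v1 = StreamProcessor(log, amount_v1)
v1.poll()
print(dict(v1.totals))  # {'alice': 1300.0, 'bob': 300.0}

# Reprocessing in Kappa: run the fixed logic from offset 0 over the same log
v2 = StreamProcessor(log, amount_v2)
v2.poll()
print(dict(v2.totals))  # {'alice': 13.0, 'bob': 3.0}

# New events are then handled incrementally by the same code path
log.append({"user": "bob", "amount": 700})
v2.poll()
print(dict(v2.totals))  # {'alice': 13.0, 'bob': 10.0}
```

In production, a replayed consumer would typically write to a new output table, and readers would switch over once it has caught up with the live stream; that cut-over is left out here.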

Data Modeling and Analytic Engineering

- Target:

  - Transform the data loaded into the DWH into analytical views

  - Understand the 4 aspects of the analysis

- Stack: Airflow, dbt Cloud, Snowflake

- Implementation: Build the data model, pipeline, data quality checks, and dashboard for bookings. Check out the repo Booking Project
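The staging and mart layering common in dbt projects can be sketched with SQLite from the Python standard library: raw rows land in a table, a staging view cleans them, and a mart view aggregates them for a dashboard. This is a minimal sketch, not the Booking Project's code: the table, column, and city names are hypothetical, and the two checks only mirror the idea behind dbt's `unique` and `not_null` tests.

```python
import sqlite3

# Hypothetical raw booking rows as they might land in the warehouse:
# (booking_id, city, check_in, nights, price_per_night, status)
RAW_ROWS = [
    (1, "Paris", "2024-01-05", 2, 40.0, "confirmed"),
    (2, "Paris", "2024-01-20", 3, 35.0, "confirmed"),
    (3, "Lisbon", "2024-01-11", 1, 60.0, "cancelled"),
    (4, "Lisbon", "2024-02-02", 4, 55.0, "confirmed"),
]

MODELS_SQL = """
CREATE TABLE raw_bookings (
    booking_id INTEGER, city TEXT, check_in TEXT,
    nights INTEGER, price_per_night REAL, status TEXT
);

-- Staging model: keep confirmed bookings and derive clean columns
CREATE VIEW stg_bookings AS
SELECT booking_id,
       city,
       substr(check_in, 1, 7) AS booking_month,
       nights * price_per_night AS revenue
FROM raw_bookings
WHERE status = 'confirmed';

-- Mart model: the analytical view a dashboard would query
CREATE VIEW mart_revenue_by_city AS
SELECT city,
       booking_month,
       COUNT(*) AS bookings,
       SUM(revenue) AS revenue
FROM stg_bookings
GROUP BY city, booking_month;
"""


def run_quality_checks(conn):
    """Return a list of failures, in the spirit of dbt unique/not_null tests."""
    failures = []
    dupes = conn.execute(
        "SELECT booking_id FROM raw_bookings GROUP BY booking_id HAVING COUNT(*) > 1"
    ).fetchall()
    if dupes:
        failures.append(f"duplicate booking_id: {[row[0] for row in dupes]}")
    null_keys = conn.execute(
        "SELECT COUNT(*) FROM raw_bookings WHERE booking_id IS NULL OR city IS NULL"
    ).fetchone()[0]
    if null_keys:
        failures.append(f"{null_keys} rows with NULL booking_id or city")
    return failures


conn = sqlite3.connect(":memory:")
conn.executescript(MODELS_SQL)
conn.executemany("INSERT INTO raw_bookings VALUES (?, ?, ?, ?, ?, ?)", RAW_ROWS)

failures = run_quality_checks(conn)
rows = conn.execute(
    "SELECT * FROM mart_revenue_by_city ORDER BY city, booking_month"
).fetchall()
print(failures)  # []
print(rows)  # [('Lisbon', '2024-02', 1, 220.0), ('Paris', '2024-01', 2, 185.0)]
```

Keeping the cleaning logic in the staging view means every mart built on top of it shares the same definition of "revenue", which is the main reason for layering models this way.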

Data pipeline with Open Source Mage AI and Clickhouse

Use open source software (OSS) instead of paying the very high costs of Snowflake and Databricks; you will learn more and have more fun.

- Motivation for using open source:

  - Save money by using open source software (OSS)

  - Suitable for a small business that processes data with streaming and batching,…

  - Practice data engineering skills: build pipelines, orchestration, and a warehouse with micro-partitioning

- Stack: Mage, ClickHouse, and Docker

- Implementation: Watch the video Quick Setup Full Data Pipeline with Open Source Mage AI and Clickhouse

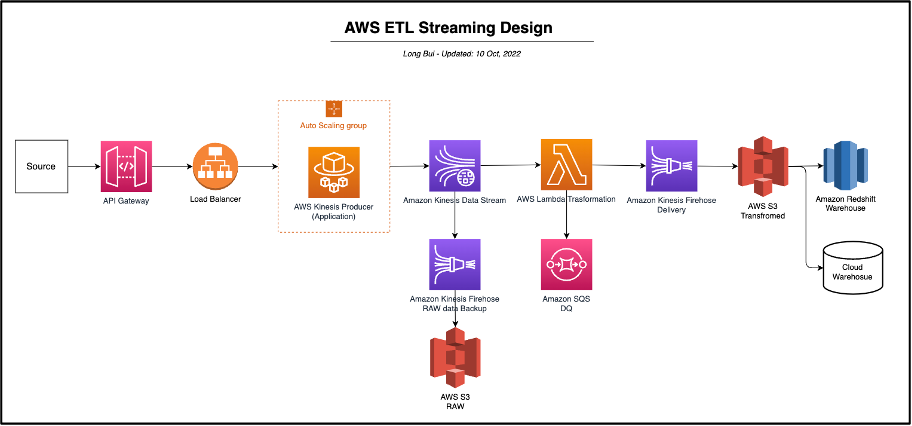

AWS Ingestion Pipeline

- Target: Design and develop a data pipeline architecture using AWS data services. This project is a typical data pipeline built on those services.

- Stack: AWS Cloud, AWS data services

- Implementation: Check out the blog post AWS Data Pipeline

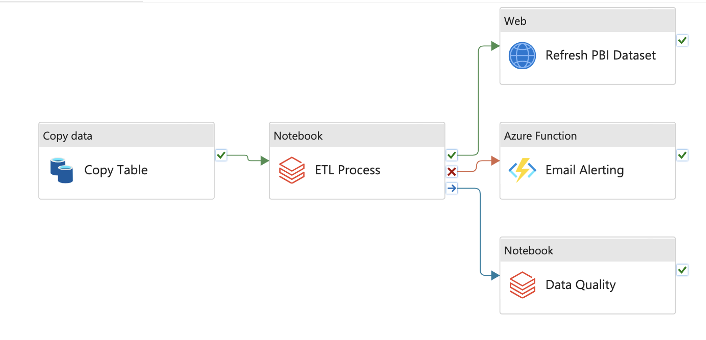
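A common first step in an AWS ingestion pipeline is a Lambda function triggered by S3 event notifications when files land in a bucket. Assuming that setup, the sketch below parses the notification records (`Records[].s3.bucket.name` and the URL-encoded `Records[].s3.object.key`) and turns each new object into an ingestion task. The routing prefixes, bucket name, and task format are hypothetical and not taken from the linked blog post; boto3 calls are deliberately left out so the handler runs locally.

```python
from urllib.parse import unquote_plus

# Hypothetical routing table: S3 key prefix -> dataset name in the raw zone
ROUTES = {
    "landing/orders/": "orders",
    "landing/customers/": "customers",
}


def route(key):
    """Return the dataset for an object key, or None if no prefix matches."""
    for prefix, dataset in ROUTES.items():
        if key.startswith(prefix):
            return dataset
    return None


def lambda_handler(event, context):
    """Turn S3 ObjectCreated notifications into ingestion tasks."""
    tasks, skipped = [], []
    for record in event.get("Records", []):
        # Only react to new objects; ignore deletions and other event types
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded in the notification (a space becomes '+')
        key = unquote_plus(record["s3"]["object"]["key"])
        dataset = route(key)
        if dataset is None:
            skipped.append(key)
            continue
        tasks.append({
            "dataset": dataset,
            "source": f"s3://{bucket}/{key}",
            "size_bytes": record["s3"]["object"].get("size", 0),
        })
    # Assumption: a real pipeline might start a Glue job or Step Functions
    # execution per task via boto3; this sketch only returns the tasks.
    return {"tasks": tasks, "skipped": skipped}


# Trimmed-down sample event containing only the fields the handler reads
sample_event = {
    "Records": [
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "example-data-lake"},
                "object": {"key": "landing/orders/2024-01-05+orders.csv", "size": 1024}}},
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "example-data-lake"},
                "object": {"key": "tmp/notes.txt", "size": 10}}},
        {"eventName": "ObjectRemoved:Delete",
         "s3": {"bucket": {"name": "example-data-lake"},
                "object": {"key": "landing/orders/old.csv"}}},
    ]
}

result = lambda_handler(sample_event, None)
print(result["tasks"])
print(result["skipped"])  # ['tmp/notes.txt']
```

Skipping unknown prefixes instead of failing keeps one stray file from blocking the whole batch of records delivered in a single invocation.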

Azure Data Pipeline in 1 hour

- Target: To resolve the problems, understand the current data pipeline concepts, identify the areas where improvements are possible, and adopt the changes.

- Stack: Azure Data Services, Power BI

- Implementation: Check out the blog post Azure Data Pipeline

Start from the basics and understand the fundamentals of the services and tools you’re using. This makes it easier to switch to other services later.

You can also check the Recent Note section for a list of projects and thoughts.

Design ETL Pipeline for Interview Assessment

- Check out this free documentation: drive docs

- If you want the editable Word version, check the sponsor & store

How to do everything

Follow these rules:

- Start small

- Stay disciplined

- Stay consistent

- Never stop