Basic Skills

- Data Engineering Project Setup

- Learn How To Code

- Getting Familiar with Git

- Programming

- Data Structures and Algorithms

- Operating Systems

- Computer Networks

- Software Testing and Data Testing

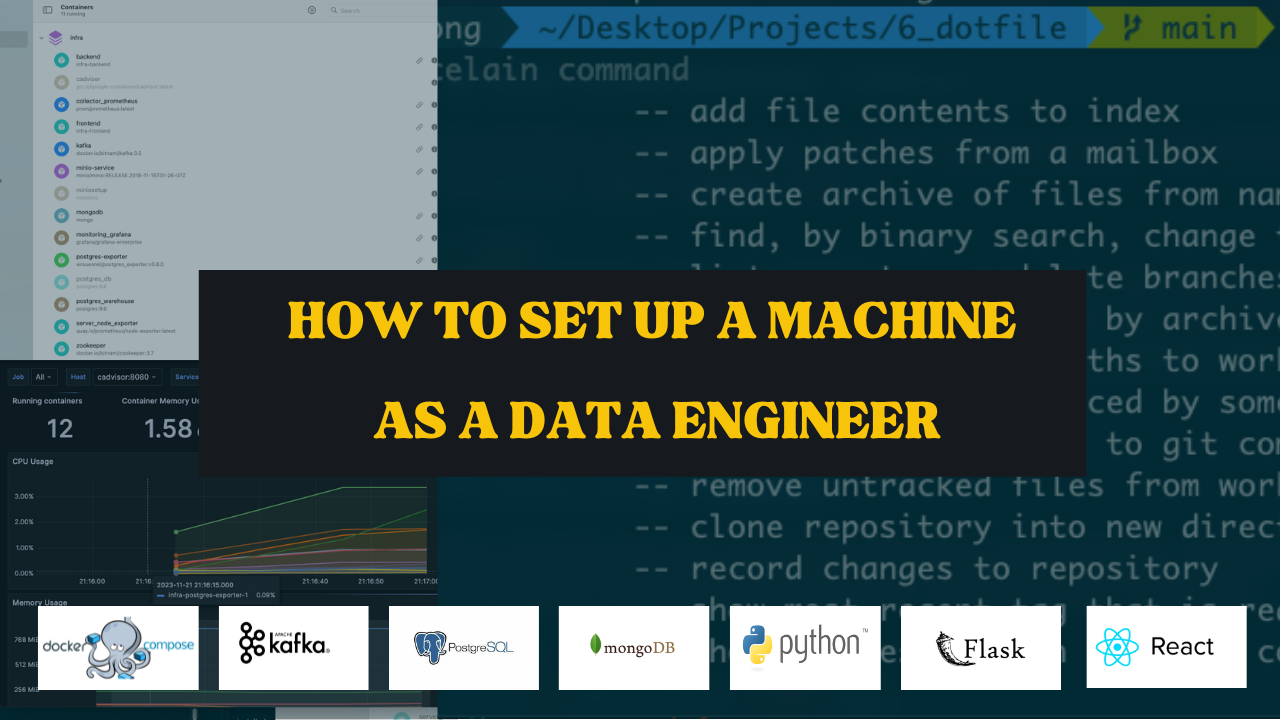

Data Engineering Project Setup

Known as Data Tech-Stack for building OpenData platforms and applications.

Try to use this setup for your projects, and customize the configuration as your application requires. There are, however, a few common configurations for software development, and for data development in particular.

Check out this repo dotfile

How to port a data pipeline?

Today, many traditional businesses have been investing more in digitalizing their operations and are gradually moving towards workflow automation. With a foundation and training as an automation engineer, I completely understand and agree with why there is this promising transformation, which promises to bring about changes similar to the transition from Industry 1.0 to 2.0 and to 3.0.

To deploy data pipeline projects quickly and easily with open-source tools, instead of spending hundreds or thousands of dollars on cloud platform services, I prioritize two criteria: free-first and local-first.

Some SaaS tools that I have tried and recommend for understanding how to set up data pipelines comprehensively and effectively:

- mage ai

- sqlmesh

- metabase

If you, like me, are a data engineer, or want to become one and understand how data pipelines operate, try the tools I’ve listed. Everything is free, although paid services might be needed in the early stages or when you need to make a jump in knowledge.

Many career switchers still struggle to understand what the actual work entails, and wonder whether a pipeline is easy to build and accessible for beginners.

I’ve been sharing this for a long time, but many still don’t know where to start, so try these 3 tools. Keep it simple.

Learn How To Code

Why this is important: Without coding you cannot do much in data engineering. As an engineer and developer, you should not care too much about which programming language you use. Focus on solving the problem.

The possibilities are endless:

- Writing data to, or quickly getting some data out of, a SQL DB

- Getting free data from different databases and extracting valuable information

- Testing by producing messages to a Kafka topic

- Reading data from a topic and storing it in MongoDB

- Understanding the source code of a Python application or a Java web service

- Reading counter statistics out of an HBase key-value store

- Organizing all data flows using Prefect or Dagger

- Trying out a SaaS such as Airbyte
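As a small illustration of the first item in the list above, here is a minimal sketch of getting data out of a SQL database with Python's built-in sqlite3 module. The in-memory database, the orders table, and its rows are made up for the example; a real project would connect to its own database.

```python
import sqlite3

# In-memory database so the example leaves no files behind (illustrative data only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 10.0), ("bob", 25.5), ("alice", 4.5)],
)

# Total amount per customer; the threshold is passed as a bound parameter
# rather than being formatted into the SQL string.
rows = conn.execute(
    "SELECT customer, SUM(amount) FROM orders "
    "GROUP BY customer HAVING SUM(amount) > ? ORDER BY customer",
    (5,),
).fetchall()
conn.close()

print(rows)
```

The same pattern (connect, execute with parameters, fetch) carries over to most Python database drivers, although connection details differ per driver.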

So, which language do I recommend then?

I highly recommend learning at least Python; there are too many good reasons to list.

But Java is the one I recommend most. It’s everywhere and it has strong community support.

When you get into data processing with Spark, you should use Scala. After learning Java, this is easy to do.

In late 2023 and 2024, there have been many changes in data and infrastructure development and in backend work related to web3 and blockchain. Golang is great for this kind of programming, and I highly recommend checking it out if you are looking for a new language.

Scala is also a great choice. It is super versatile. (Personally, I know many data people are more familiar with Python, so PySpark is a good choice too.)

Download the notebook on OOP and SOLID principles before reading the following explanation.

- OOP (object-oriented programming)

You should know these basics.

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

classDiagram

direction TB

class Animal {

+String name

+int age

+speak()

}

class Dog {

+String breed

+bark()

}

class Cat {

+String color

+meow()

}

Animal <|-- Dog

Animal <|-- Cat

Animal : +speak()

Dog : +bark()

Cat : +meow()

Dog : +String breed

Cat : +String color

where,

- Animal Class: Represents a general class with attributes name and age, and a method speak().

- Dog Class: Inherits from Animal and adds a new attribute breed and a method bark().

- Cat Class: Also inherits from Animal and adds a new attribute color and a method meow().

Core Concepts Illustrated:

- Classes and Objects: Animal, Dog, and Cat are classes. Dog and Cat are specific implementations of Animal.

- Inheritance: Dog and Cat inherit from Animal, gaining its attributes and methods.

- Encapsulation: Each class encapsulates its attributes and methods.

- Polymorphism: Methods like speak() can be used interchangeably for different animal types through their common Animal interface.

    # Class definition
    class Animal:
        def __init__(self, name):
            self.name = name

        def speak(self):
            raise NotImplementedError("Subclass must implement abstract method")

    # Subclass definition
    class Dog(Animal):
        def speak(self):
            return "Woof!"

    # Another subclass definition
    class Cat(Animal):
        def speak(self):
            return "Meow!"

    # Creating objects
    dog = Dog("Buddy")
    cat = Cat("Whiskers")

    # Using objects
    print(dog.name)     # Output: Buddy
    print(dog.speak())  # Output: Woof!
    print(cat.name)     # Output: Whiskers
    print(cat.speak())  # Output: Meow!

OOP promotes structured code by organizing it into classes and objects, fostering code reuse through inheritance, encapsulating data to protect it from unintended modifications, and leveraging polymorphism to handle different types of objects through a common interface. This results in more modular, maintainable, and scalable software systems.
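The listing above shows inheritance and polymorphism, but not the encapsulation mentioned in the summary. The following is a minimal sketch of one way to add it, assuming an age attribute (as in the class diagram) guarded by a property; the validation rule and the error message are illustrative choices, not part of the original example.

```python
class Animal:
    def __init__(self, name, age):
        self.name = name
        self.age = age  # assignment goes through the property setter below

    @property
    def age(self):
        return self._age

    @age.setter
    def age(self, value):
        # Encapsulation: the class decides which values are acceptable.
        if value < 0:
            raise ValueError("age must not be negative")
        self._age = value

    def speak(self):
        raise NotImplementedError("Subclass must implement speak()")


class Dog(Animal):
    def speak(self):
        return "Woof!"


class Cat(Animal):
    def speak(self):
        return "Meow!"


# Polymorphism: the caller only relies on the common speak() method.
sounds = [animal.speak() for animal in (Dog("Buddy", 3), Cat("Whiskers", 2))]
print(sounds)

# Encapsulation: an invalid value is rejected at assignment time.
try:
    Dog("Rex", -1)
except ValueError as err:
    rejected = str(err)
    print(rejected)
```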

SOLID Principles

SOLID is an acronym representing five design principles aimed at creating more understandable, flexible, and maintainable software. These principles are fundamental to object-oriented design and programming. Here’s a detailed explanation of each:

Single Responsibility Principle (SRP)

- Definition: A class should have only one reason to change, meaning it should have only one responsibility or job.

- Purpose: To ensure that a class is focused on a single task, making it easier to understand, test, and maintain. If a class has multiple responsibilities, changes to one responsibility might affect others, leading to a tightly coupled codebase.

- Example: If you have a Report class that handles both generating and printing reports, it violates SRP. Instead, split it into two classes: ReportGenerator (responsible for generating reports) and ReportPrinter (responsible for printing reports).

    # Violates SRP
    class Report:
        def generate(self):
            # Generate report logic
            pass

        def print(self):
            # Print report logic
            pass

    # Follows SRP
    class ReportGenerator:
        def generate(self):
            # Generate report logic
            pass

    class ReportPrinter:
        def print(self, report):
            # Print report logic
            pass

Open/Closed Principle (OCP)

- Definition: Software entities (classes, modules, functions, etc.) should be open for extension but closed for modification.

- Purpose: To allow new functionality to be added without altering existing code, which helps in preventing bugs and maintaining existing functionality. This is typically achieved through abstraction and polymorphism.

- Example: Suppose you have a Shape class with a method draw(). Instead of modifying this class every time a new shape is added, you can use inheritance and polymorphism. Create a base class Shape and extend it with subclasses like Circle, Rectangle, etc., each implementing the draw() method.

    # Follows OCP
    class Shape:
        def draw(self):
            pass

    class Circle(Shape):
        def draw(self):
            # Draw circle logic
            pass

    class Rectangle(Shape):
        def draw(self):
            # Draw rectangle logic
            pass

    def draw_shapes(shapes):
        for shape in shapes:
            shape.draw()

Liskov’s Substitution Principle (LSP)

- Definition: Objects of a superclass should be replaceable with objects of a subclass without affecting the correctness of the program.

- Purpose: To ensure that a subclass can stand in for its superclass without altering the desirable properties of the program. This means that derived classes must be substitutable for their base classes.

- Example: If you have a Bird class with a method fly(), and you create a Penguin subclass, the Penguin should not be able to override fly() in such a way that violates the expected behavior of Bird.

    # Violates LSP
    class Bird:
        def fly(self):
            pass

    class Penguin(Bird):
        def fly(self):
            # Penguins can't fly
            raise Exception("Penguins can't fly!")

    # Follows LSP
    class Bird:
        def move(self):
            pass

    class Sparrow(Bird):
        def move(self):
            # Sparrow flies
            pass

    class Penguin(Bird):
        def move(self):
            # Penguin walks
            pass

Interface Segregation Principle (ISP)

- Definition: A client should not be forced to depend on interfaces it does not use. Instead of one large interface, many small, specific interfaces are preferred.

- Purpose: To avoid creating a large, cumbersome interface with methods that are irrelevant to some implementing classes. This principle helps in keeping the system modular and easier to maintain.

- Example: If you have an interface Machine with methods print(), scan(), and fax(), and you have a Printer class that only implements print(), it should not be forced to implement scan() and fax(). Instead, separate interfaces for each capability should be created.

    # Violates ISP
    class Machine:
        def print(self):
            pass

        def scan(self):
            pass

        def fax(self):
            pass

    # Follows ISP
    class Printer:
        def print(self):
            # Print logic
            pass

    class Scanner:
        def scan(self):
            # Scan logic
            pass

    class FaxMachine:
        def fax(self):
            # Fax logic
            pass

Dependency Inversion Principle (DIP)

- Definition: High-level modules should not depend on low-level modules. Both should depend on abstractions. Additionally, abstractions should not depend on details; details should depend on abstractions.

- Purpose: To reduce the coupling between high-level and low-level components by introducing abstractions. This principle helps in making the system more flexible and easier to modify.

- Example: Instead of having a UserService class directly depend on a MySQLDatabase class, it should depend on a Database interface. This allows you to change the database implementation without affecting the UserService.

    # Violates DIP
    class MySQLDatabase:
        def query(self):
            pass

    class UserService:
        def __init__(self):
            self.database = MySQLDatabase()

    # Follows DIP
    class Database:
        def query(self):
            pass

    class MySQLDatabase(Database):
        def query(self):
            pass

    class UserService:
        def __init__(self, database: Database):
            self.database = database
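To make the benefit of the DIP-compliant version concrete, here is a minimal runnable sketch in which the injected database is swapped for an in-memory stand-in, for example in a test. InMemoryDatabase, get_user_name, and the query(user_id) signature are hypothetical additions for illustration; the original query() takes no arguments.

```python
from abc import ABC, abstractmethod


class Database(ABC):
    """Abstraction that both high-level and low-level code depend on."""

    @abstractmethod
    def query(self, user_id):
        ...


class InMemoryDatabase(Database):
    """Hypothetical stand-in that serves rows from a dict instead of MySQL."""

    def __init__(self, rows):
        self._rows = rows

    def query(self, user_id):
        return self._rows.get(user_id)


class UserService:
    def __init__(self, database: Database):
        # The concrete database is injected, not constructed here.
        self.database = database

    def get_user_name(self, user_id):
        row = self.database.query(user_id)
        return row["name"] if row else None


service = UserService(InMemoryDatabase({1: {"name": "Ada"}}))
print(service.get_user_name(1))
print(service.get_user_name(2))
```

Because UserService only sees the Database abstraction, replacing the in-memory class with a real database client would not require touching the service code.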

Finally

- Single Responsibility Principle (SRP): Ensure each class has one responsibility.

- Open/Closed Principle (OCP): Design classes to be open for extension but closed for modification.

- Liskov’s Substitution Principle (LSP): Subtypes must be substitutable for their base types without altering the correctness of the program.

- Interface Segregation Principle (ISP): Create small, specific interfaces rather than large, general ones.

- Dependency Inversion Principle (DIP): Depend on abstractions, not on concrete implementations.

Applying these SOLID principles helps in creating robust, maintainable, and scalable software systems.

- What unit tests are and how they make sure your code works

- Functional Programming

- How to use build management tools like Maven

- Load Test, Performance Test

Understand the Data Object Modeling

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

classDiagram

class Scraper {

+startScraping()

+getCategories(): List<Category>

+getSubCategories(category: Category): List<SubCategory>

+getPages(subCategory: SubCategory): List<Page>

+getProductDetails(page: Page): List<ProductDetail>

+storeData(productDetails: List<ProductDetail>)

}

class Category {

+id: int

+name: String

+url: String

+getSubCategories(): List<SubCategory>

}

class SubCategory {

+id: int

+name: String

+url: String

+category: Category

+getPages(): List<Page>

}

class Page {

+id: int

+url: String

+subCategory: SubCategory

+getProductDetails(): List<ProductDetail>

}

class ProductDetail {

+id: int

+name: String

+price: float

+description: String

+url: String

+page: Page

}

class Database {

+connect()

+storeProducts(products: List<Product>)

+storeProductDetails(productDetails: List<ProductDetail>)

}

class Product {

+id: int

+name: String

+category: String

+subCategory: String

+price: float

}

Scraper --> Category : "scrapes"

Category --> SubCategory : "has"

SubCategory --> Page : "has"

Page --> ProductDetail : "has"

Scraper --> Database : "stores data in"

Database --> Product : "stores"

Database --> ProductDetail : "stores"

ProductDetail --> Page : "belongs to"

Page --> SubCategory : "belongs to"

SubCategory --> Category : "belongs to"

Figure: Design of Website Ingestion ERD
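The object model in the diagram can be sketched in Python with dataclasses. This is a simplified illustration, not the author's implementation: it keeps only the downward "has" relationships, drops the description field and the "belongs to" back-references for brevity, and uses made-up example.com data.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProductDetail:
    id: int
    name: str
    price: float
    url: str


@dataclass
class Page:
    id: int
    url: str
    product_details: List[ProductDetail] = field(default_factory=list)


@dataclass
class SubCategory:
    id: int
    name: str
    url: str
    pages: List[Page] = field(default_factory=list)


@dataclass
class Category:
    id: int
    name: str
    url: str
    sub_categories: List[SubCategory] = field(default_factory=list)


def all_product_details(category):
    """Walk Category -> SubCategory -> Page -> ProductDetail, following the 'has' arrows."""
    return [
        detail
        for sub in category.sub_categories
        for page in sub.pages
        for detail in page.product_details
    ]


# Made-up data to exercise the model.
laptop = ProductDetail(1, "Laptop A", 999.0, "https://example.com/p/1")
mouse = ProductDetail(2, "Mouse B", 25.0, "https://example.com/p/2")
page = Page(1, "https://example.com/computers/laptops?page=1", [laptop, mouse])
laptops = SubCategory(1, "Laptops", "https://example.com/computers/laptops", [page])
computers = Category(1, "Computers", "https://example.com/computers", [laptops])

names = [detail.name for detail in all_product_details(computers)]
print(names)
```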

Getting Familiar with Git

Understanding Git’s Importance: Tracking changes in coding and managing multiple program versions can be a daunting task. It becomes increasingly challenging without a structured system in place for collaboration and documentation.

Imagine working on a Spark application and your colleagues need to make alterations while you’re away. Without proper code management, they face a dilemma: Where is the code located? What were the recent changes? Where’s the documentation? How do we track modifications?

However, by hosting your code on platforms like GitHub, your colleagues gain easy access. They can comprehend it via your documentation (don’t forget in-line comments).

Developers can pull your code, create a new branch, and implement changes. After you return, you can review their changes and merge them, keeping a single unified application.

Where to Learn: Explore GitHub Guides for comprehensive basics: GitHub Guides

Refer to this cheat sheet for essential Git commands that can be a lifesaver: Atlassian Git Cheat Sheet

Additionally, delve into these concepts:

- Add and commit

- Branching

- Forking

- Pull vs Fetch

- Push

- Configuration

GitHub employs markdown for page writing, a straightforward yet enjoyable language. Familiarize yourself with it using this markdown cheat sheet: Markdown Cheat Sheet

For text file conversions to and from markdown.

Please use a combination of Markdown and YAML.

Note: Please use .gitignore and set up your git config before you start developing anything.

Example of Git flow:

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

gitGraph

commit

commit

branch develop

checkout develop

commit

commit

checkout main

merge develop

commit

commit

Figure: Git Workflow

Programming

Watch this video, in which I recommend these languages for data engineers; learning them will surely make you a better engineer: Learning this for data engineers | SQL, Python, NodeJs, CLI, Golang

To move fast as a data engineer, I recommend learning Python and APIs together.

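    from flask import jsonify, request

    # server_bp, logger, ServerHandler and insert_quality_rules are defined
    # elsewhere in the project this snippet comes from.

    @server_bp.route('/insert_quality_rules', methods=['POST'])
    def dcs_insert_quality_rules():
        try:
            # Parse the JSON body once and read the fields from it
            payload = request.get_json()
            table_name = payload.get('tableName')
            column_name = payload.get('columnName')
            connection_name = payload.get('dbType')
            logger.debug(f"Selected Table {table_name}, column_name={column_name}")

            # Build the insert statement and run it on the selected connection
            db_handler = ServerHandler(connection_name)
            insert_quality_rule_query = insert_quality_rules(connection_name, table_name, column_name)
            rules_structure = db_handler.exec_sql_query(insert_quality_rule_query)
            return jsonify(rules_structure)
        except Exception as e:
            # Report failures as an HTTP 500 (Internal Server Error)
            return jsonify({'error': str(e)}), 500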

Proficiency in languages like Python, Java, SQL, R, or others relevant to your domain. Additionally, familiarity with scripting languages (e.g., Bash, PowerShell) can be beneficial.

As a data engineer, I have often been asked to work with NodeJS for backend processing, such as APIs and backend database models, as well as with Scala for stream processing.

I really enjoy programming languages, and with AI code generation I can write code in any of them very efficiently. Don’t be limited by languages, but for data processing you should definitely try Python.

I recommend learning how to test your code, and in particular learning one testing framework (Pytest, for example). As a data engineer, I often write:

- Functional Test

- Logical Test
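As a minimal sketch of what a test in the Pytest style looks like: tests are plain functions whose names start with test_ and that use assert. The normalize_price function is a hypothetical helper invented for this example; the test functions are written so that they also run without Pytest installed.

```python
def normalize_price(raw):
    """Turn a scraped price string such as ' $1,299.50 ' into a float."""
    cleaned = raw.strip().replace("$", "").replace(",", "")
    if not cleaned:
        raise ValueError("empty price")
    return float(cleaned)


def test_normalize_price_parses_currency_string():
    assert normalize_price(" $1,299.50 ") == 1299.5


def test_normalize_price_rejects_empty_input():
    try:
        normalize_price("   ")
    except ValueError:
        return  # expected: blank input is rejected
    raise AssertionError("expected ValueError for blank input")
```

Saved as a file such as test_prices.py, these functions would be collected and run by the pytest command; with Pytest available, the second test is more commonly written using pytest.raises.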

Version Control Systems

Proficiency in using Git or other version control tools for collaborative development and managing codebases.

As I mentioned in the previous topic, PLEASE use Git not only for tracking changes and collaborating with your team but also for your own progress, because it reflects how your work has progressed; you may be surprised when you check the git logs.

Below is an explanation, with a Mermaid diagram, of Version Control Systems (VCS) and their role in data pipeline development and data versioning.

Version Control Systems (VCS) are tools that help manage changes to source code or data files over time. They allow multiple versions of files to be tracked, changes to be documented, and collaboration among team members to be managed effectively. Popular VCS tools include Git, SVN (Subversion), and Mercurial.

Key Benefits of VCS for Data Pipeline Development

- Track Changes:
  - VCS allows you to track changes made to data processing scripts, configuration files, and other components of a data pipeline.
  - Provides a history of changes, making it easier to understand how the pipeline evolved over time.

- Collaboration:
  - Multiple team members can work on the same project simultaneously. Changes can be merged, and conflicts can be resolved systematically.
  - Facilitates code reviews and collaboration through pull requests and merge requests.

- Versioning of Data Processing Scripts:
  - Scripts and configuration files used in data pipelines can be versioned, ensuring that different versions of the pipeline can be compared, rolled back, or reproduced.

- Reproducibility:
  - By keeping track of the versions of scripts and configurations, you can ensure that data processing is reproducible. This is crucial for debugging and validating data processing steps.

- Backup and Recovery:
  - Changes are recorded and can be reverted if necessary. This acts as a backup mechanism to recover previous states of the data pipeline in case of errors or unintended changes.

- Data Versioning:
  - VCS can be used in conjunction with data versioning tools to track changes in datasets. This is useful for managing changes in data schema or content over time.
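One simple idea behind data versioning is to record a content fingerprint of a dataset next to the scripts under version control, so that a change in the data shows up as a change in the repository. The sketch below illustrates that idea with hashlib; dataset_fingerprint and the sample rows are hypothetical, and this is not a description of how any particular data versioning tool works.

```python
import hashlib


def dataset_fingerprint(rows):
    """Return a SHA-256 hex digest of the rows that does not depend on row order."""
    digest = hashlib.sha256()
    # Naive serialization (comma-joined values); good enough for an illustration.
    for line in sorted(",".join(map(str, row)) for row in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


v1 = [(1, "alice"), (2, "bob")]
v1_reordered = [(2, "bob"), (1, "alice")]
v2 = [(1, "alice"), (2, "bobby")]

same_content = dataset_fingerprint(v1) == dataset_fingerprint(v1_reordered)
changed_content = dataset_fingerprint(v1) != dataset_fingerprint(v2)
print(same_content, changed_content)
```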

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

graph TD

%% Add a transparent text node as a watermark

style Watermark fill:none,stroke:none

Watermark[Created by: LongBui]

A["Data Pipeline Development"]

subgraph VCS_Integration

B1["Track Changes"]

B2["Collaborate"]

B3["Version Scripts"]

B4["Backup & Recover"]

end

subgraph Development_Stages

C1["Design Pipeline"]

C2["Write Scripts"]

C3["Test & Deploy"]

end

subgraph VCS_Actions

D1["Commit Changes"]

D2["Push to Repo"]

D3["Pull Updates"]

D4["Merge Changes"]

end

A --> VCS_Integration

VCS_Integration --> Development_Stages

Development_Stages --> VCS_Actions

VCS_Actions --> Development_Stages

VCS_Actions --> A

Software Development Lifecycle (SDLC)

Understanding the software development process, methodologies (Agile, Scrum, Kanban), and best practices for efficient project management.

Agile and Scrum help teams stay in tune with the development process and align with the enterprise roadmap and the organization’s vision.

It might not be our main concern, but with Agile and Scrum we can keep control and engineer better.

Here’s a Mermaid diagram illustrating the Software Development Lifecycle (SDLC) with Scrum methodology:

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

graph TD

%% Add a transparent text node as a watermark

style Watermark fill:none,stroke:none

Watermark[Created by: LongBui]

A[SDLC with Scrum]

subgraph Planning

A1[Product Backlog Creation]

A2[Sprint Planning]

end

subgraph Execution

B1[Sprint 1]

B2[Sprint 2]

B3[Sprint 3]

B1 --> B2

B2 --> B3

end

subgraph Monitoring

C1[Daily Standups]

C2[Sprint Review]

C3[Sprint Retrospective]

end

subgraph Delivery

D1[Product Increment]

D2[Release to Production]

end

A --> Planning

Planning --> Execution

Execution --> Monitoring

Monitoring --> Delivery

Delivery --> A

%% Styling

classDef planning fill:#ffccff,stroke:#333,stroke-width:2px;

classDef execution fill:#ccffcc,stroke:#333,stroke-width:2px;

classDef monitoring fill:#ffffcc,stroke:#333,stroke-width:2px;

classDef delivery fill:#ccffff,stroke:#333,stroke-width:2px;

class Planning planning;

class Execution execution;

class Monitoring monitoring;

class Delivery delivery;

- Planning:
  - Product Backlog Creation: Compiling a list of all desired features and requirements for the product.
  - Sprint Planning: Selecting backlog items for the upcoming sprint and creating a plan for their delivery.

- Execution:
  - Sprints: Iterative cycles (Sprints 1, 2, 3) where work is done. Each sprint typically lasts 2-4 weeks.

- Monitoring:
  - Daily Standups: Short daily meetings to discuss progress, plans, and obstacles.
  - Sprint Review: Meeting at the end of a sprint to demonstrate completed work and gather feedback.
  - Sprint Retrospective: Reflecting on the sprint to identify what went well and what could be improved.

- Delivery:
  - Product Increment: The working software product delivered at the end of each sprint.
  - Release to Production: Deploying the product increment to the production environment for users.

This diagram provides an overview of how Scrum integrates into the SDLC, highlighting the iterative nature of Scrum and its key activities.

Data Structures and Algorithms

Strong understanding of fundamental data structures (lists, trees, graphs) and algorithms (sorting, searching, optimization).

Knowing the fundamentals of how to implement good software is always better than starting from zero. As the saying goes, “we don’t know what we don’t know”; without that knowledge we cannot become faster and more efficient.

With data structures and algorithms, I mostly deal with creating a data mapping between source and destination, and with converting and processing data as efficiently as possible.

For example:

- Make a data pipeline faster (up to 10x in some cases) by streaming records through an iterator instead of loading them all into an array.

- Improve data quality (by as much as 90% in some cases) by storing records as immutable tuples instead of mutable lists.
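The two examples above can be sketched in a few lines. This is an illustration under simple assumptions (synthetic records, container size measured with sys.getsizeof); it shows why an iterator holds less in memory and why a tuple cannot be modified by accident, and it does not try to reproduce the figures quoted above.

```python
import sys


def read_records(n):
    """Yield records one at a time instead of building a full list."""
    for i in range(n):
        yield (i, f"user_{i}")  # each record is an immutable tuple


n = 100_000
as_list = list(read_records(n))  # materializes every record up front
as_iterator = read_records(n)    # holds only the generator state

# Size of the container itself: the list grows with n, the generator does not.
list_size = sys.getsizeof(as_list)
iterator_size = sys.getsizeof(as_iterator)
print(list_size > iterator_size)

# The iterator can still be consumed in a single pass.
total = sum(record[0] for record in as_iterator)

# A tuple record rejects in-place modification.
record = as_list[0]
try:
    record[1] = "changed"
except TypeError:
    mutation_blocked = True
else:
    mutation_blocked = False
print(mutation_blocked)
```

The trade-off is that an iterator can be consumed only once, so it suits single-pass streaming steps rather than data that must be revisited.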

Getting detail with DSA in Data Engineering

Operating Systems

Understanding the core concepts of operating systems (e.g., process management, memory management, file systems) and practical knowledge of Linux/Unix environments.

As data engineers, we mostly work with systems and platforms that are a combination of machines, so some knowledge of Linux, Unix, shell, processes, memory, CPU, and the OSI model is helpful.

You might ask, “Why Linux?” Here are a few reasons why Linux is a preferred choice for data engineering:

- Open Source: Linux is open-source, meaning you can modify and customize the operating system to fit your specific needs without licensing costs.

- Performance and Stability: Linux is known for its high performance and stability, making it ideal for handling large-scale data processing tasks and running complex applications.

- Security: Linux provides advanced security features and configurations, helping protect sensitive data and ensuring compliance with security standards.

- Flexibility: Linux supports a wide range of tools and technologies used in data engineering, such as Hadoop, Spark, and Docker, allowing for a versatile and adaptable data processing environment.

- Community Support: The extensive Linux community provides a wealth of resources, documentation, and support, which can be invaluable for troubleshooting and learning.

Operating systems and their role in data engineering:

Operating Systems (OS) are crucial for managing hardware and software resources on a computer. They provide essential services for data processing, task management, and system operations. In data engineering, the OS plays a key role in handling data storage, execution environments, and resource allocation.

Operating Systems for Data Engineering

Resource Management:

- Manages CPU, memory, and I/O resources for running data processing tasks and applications.

- Ensures efficient allocation and usage of resources across different processes.

File Systems:

- Provides file systems for storing and accessing large volumes of data.

- Supports various file formats and data structures used in data engineering workflows.

Process Management:

- Handles the execution of data processing jobs, batch jobs, and concurrent tasks.

- Manages process scheduling and prioritization.

Security:

- Implements security measures to protect data and access to system resources.

- Supports authentication, authorization, and data encryption.

Networking:

- Facilitates network communication for distributed data processing.

- Manages network interfaces and protocols for data transfer between systems.

Virtualization:

- Enables virtualization of resources, allowing multiple environments (like containers or virtual machines) to run on a single physical machine.

- Supports isolated environments for testing and deploying data engineering applications.
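Several of the services listed above are visible from Python's standard library. The following minimal sketch queries CPU and disk resources and then starts a child process and waits for it; it only illustrates the concepts and is not a monitoring tool.

```python
import os
import shutil
import subprocess
import sys

# Resource management: how many CPUs the OS reports, and disk usage
# for the file system that holds the current working directory.
cpu_count = os.cpu_count()
disk = shutil.disk_usage(os.getcwd())
print(f"CPUs: {cpu_count}, disk free: {disk.free} of {disk.total} bytes")

# Process management: every running program has a process ID (PID).
pid = os.getpid()

# Start a child process (a second Python interpreter), wait for it to finish,
# and capture what it printed. check=True raises if the child exits with an error.
result = subprocess.run(
    [sys.executable, "-c", "import os; print(os.getpid())"],
    capture_output=True,
    text=True,
    check=True,
)
child_pid = int(result.stdout.strip())
print(f"parent PID: {pid}, child PID: {child_pid}")
```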

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

graph TD

%% Add a transparent text node as a watermark

style Watermark fill:none,stroke:none

Watermark[Created by: LongBui]

A["Operating Systems"]

subgraph OS_Components

B1["Resource Management"]

B2["File Systems"]

B3["Process Management"]

B4["Security"]

B5["Networking"]

B6["Virtualization"]

end

A --> B1

A --> B2

A --> B3

A --> B4

A --> B5

A --> B6

B1 --> B3

B2 --> B3

B3 --> B4

B5 --> B6

B6 --> B3

This diagram illustrates the various aspects of operating systems that are crucial for data engineering, showing how they support and manage different components of the data processing workflow.

Computer Networks

Basics of networking protocols (TCP/IP, HTTP, DNS), OSI model, understanding of how data travels across networks, and network security principles.

The network is the most complicated part of a system or platform. It is related to everything in the system.

Network security, data security, and platform security are the most important aspects, a practice also known as DevSecOps.
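To see how data travels over TCP/IP in practice, here is a minimal sketch of a client and a one-shot server talking over the loopback interface with Python's socket module. The upper-casing "protocol" and the message are made up for the example; real services speak protocols such as HTTP on top of the same TCP connection.

```python
import socket
import threading


def serve_once(server_sock):
    """Accept one connection, read a message, and send it back upper-cased."""
    conn, _addr = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(data.upper())


# Bind to the loopback address; port 0 asks the OS to pick a free port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
host, port = server.getsockname()

worker = threading.Thread(target=serve_once, args=(server,))
worker.start()

# The client opens a TCP connection, sends bytes, and reads the reply.
with socket.create_connection((host, port), timeout=5) as client:
    client.sendall(b"hello pipeline")
    reply = client.recv(1024)

worker.join()
server.close()
print(reply)
```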

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

graph BT

%% Add a transparent text node as a watermark

style Watermark fill:none,stroke:none

Watermark[Created by: LongBui]

%% A["Computer Networks"]

subgraph Networking_Protocols

%% B1["TCP/IP"]

%% B2["HTTP"]

%% B3["DNS"]

end

subgraph OSI_Model

%% C1["Physical Layer"]

%% C2["Data Link Layer"]

%% C3["Network Layer"]

%% C4["Transport Layer"]

%% C5["Session Layer"]

%% C6["Presentation Layer"]

%% C7["Application Layer"]

end

subgraph Network_Security

%% D1["Network Security"]

%% D2["Data Security"]

%% D3["Platform Security"]

end

subgraph Data_Engineering_Impact

E1["Data Transfer & Integration"]

E2["Performance Optimization"]

E3["Security"]

E4["Troubleshooting"]

%% E5["Scalability & Maintenance"]

end

%% A --> Networking_Protocols

%% A --> OSI_Model

%% A --> Network_Security

%% A --> Data_Engineering_Impact

%% Data_Engineering_Impact --> E1

%% Data_Engineering_Impact --> E2

%% Data_Engineering_Impact --> E3

%% Data_Engineering_Impact --> E4

%% Data_Engineering_Impact --> E5

Networking_Protocols --> E1

OSI_Model --> E1

Network_Security --> E3

OSI_Model --> E4

Network_Security --> E2

Software Testing and Data Testing

Understanding testing methodologies, writing test cases, automated testing tools, and ensuring software quality through various testing approaches.

Remember, the tech landscape is constantly evolving, so staying updated with industry trends, new tools, and advancements is equally important for a successful career in software engineering or data engineering.

%%{init: {

'theme': 'base',

'themeVariables': {

'primaryColor': '#ffffff',

'primaryTextColor': '#000000',

'primaryBorderColor': '#666666',

'lineColor': '#666666',

'secondaryColor': '#ffffff',

'tertiaryColor': '#ffffff'

}

}}%%

graph LR

%% Add a transparent text node as a watermark

style Watermark fill:none,stroke:none

Watermark[Created by: LongBui]

A["Data Warehouse Development"]

O["Data Pipeline Development"]

subgraph Pipeline_Testing

B1["Unit Testing"]

B2["Integration Testing"]

B3["System Testing"]

B4["Acceptance Testing"]

B5["Performance Testing"]

end

subgraph Data_Testing

C1["Data Validation"]

C2["Data Accuracy"]

C3["Data Integrity"]

C4["Data Completeness"]

C5["Data Transformation Testing"]

C6["Data Security Testing"]

end

A --> C1

A --> C2

A --> C3

A --> C4

A --> C5

A --> C6

O --> B1

O --> B2

O --> B3

O --> B4

O --> B5

%% O <--> A
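Some of the data testing categories in the diagram can be expressed as small check functions. The sketch below covers completeness, integrity (unique keys), and a simple validation rule; the function names, fields, and sample rows are hypothetical, and real projects often use a dedicated data quality tool for this.

```python
def check_completeness(rows, required):
    """Return indexes of rows where a required field is missing or None."""
    return [
        i for i, row in enumerate(rows)
        if any(row.get(name) is None for name in required)
    ]


def check_unique(rows, key):
    """Return key values that appear more than once (integrity check)."""
    seen, duplicates = set(), set()
    for row in rows:
        value = row.get(key)
        if value in seen:
            duplicates.add(value)
        seen.add(value)
    return sorted(duplicates)


def check_minimum(rows, name, minimum):
    """Return indexes of rows whose value for `name` is below `minimum` (validation)."""
    return [
        i for i, row in enumerate(rows)
        if row.get(name) is not None and row[name] < minimum
    ]


# Made-up rows with one problem of each kind.
rows = [
    {"id": 1, "name": "Laptop", "price": 999.0},
    {"id": 2, "name": None, "price": 25.0},
    {"id": 2, "name": "Mouse", "price": -5.0},
]

incomplete = check_completeness(rows, ["id", "name", "price"])
duplicate_ids = check_unique(rows, "id")
invalid_prices = check_minimum(rows, "price", 0)
print(incomplete, duplicate_ids, invalid_prices)
```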

Everything mentioned here is covered in the following sub-directory.