Docker and SQL Setup

Notes I used for preparing the videos: link

Preparation

Make sure Docker has installed on your machine.

Create working folder week_1_basic_n_setup

Collect this docker-compose.yaml file

version: '3'

services:

pgdatabase:

image: postgres:13

environment:

POSTGRES_USER: root

POSTGRES_PASSWORD: root

POSTGRES_DB: ny_taxi

volumes:

- "./postgres_data:/var/lib/postgresql/data:rw"

ports:

- "5432:5432"

pgadmin:

image: dpage/pgadmin4

environment:

PGADMIN_DEFAULT_EMAIL: admin@admin.com

PGADMIN_DEFAULT_PASSWORD: root

ports:

- "8080:80"

depends_on:

- pgdatabase

Downloading the data from here https://www.nyc.gov/site/tlc/about/tlc-trip-record-data.page

Download and Open pg-test-connection.ipynb

# %%

import pandas as pd

# %% [markdown]

# ```bash

# pip install sqlalchemy psycopg2-binary

# ```

# %%

from sqlalchemy import create_engine

# %%

engine = create_engine('postgresql://root:root@localhost:5432/ny_taxi')

# %% [markdown]

# If you cannot connect to ny_taxi, use `postgres` database instead

#

# ```python

# engine = create_engine('postgresql://root:root@localhost:5432/postgres')

# ```

# %%

engine.connect()

# %%

query = """

SELECT 1 as number;

"""

pd.read_sql(query, con=engine)

# %%

query = """

SELECT *

FROM pg_catalog.pg_tables

WHERE schemaname != 'pg_catalog' AND

schemaname != 'information_schema';

"""

pd.read_sql(query, con=engine)

# %%

%%bash

ls

# %%

import pandas as pd

# Read the Parquet file

df = pd.read_parquet('../data/yellow_tripdata_2024-01.parquet')

df.head()

# Save as CSV file

df.to_csv('../data/yellow_tripdata_2024-01.csv', index=False)

# %%

df = pd.read_csv('../data/yellow_tripdata_2024-01.csv', nrows=100)

# %%

df.tpep_pickup_datetime = pd.to_datetime(df.tpep_pickup_datetime)

df.tpep_dropoff_datetime = pd.to_datetime(df.tpep_dropoff_datetime)

# %%

df.to_sql(name='yellow_taxi_trips', con=engine, index=False)

# %%

query = """

SELECT * FROM yellow_taxi_trips LIMIT 10

"""

pd.read_sql(query, con=engine)

# %% [markdown]

# ```sql

# SELECT *

# FROM pg_catalog.pg_tables

# WHERE schemaname != 'pg_catalog' AND

# schemaname != 'information_schema';

# ```

#

# Source: https://www.postgresqltutorial.com/postgresql-show-tables/

Running Postgres with Docker

Access Docker

Pulling and Running Postgres Container

Bash Command for starting docker“

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v /Users/longbuivan/Documents/Projects/de-handbook/docs/datacamping/week_1_basics_and_infrastructure/2_docker_sql/postgres_data:/var/lib/postgresql/data \

-p 5432:5432 \

postgres:13

Issue If you have the following errors:

docker: Error response from daemon: invalid mode: \Program Files\Git\var\lib\postgresql\data.

See 'docker run --help'.

Solution Change the mounting path. Replace it with the following:

-p /datacamping/...:/var/lib/postgresql/data

Issue If you see that postgres_data is empty after running

the container, try these:

Solution

- Deleting the folder and running Docker again (Docker will re-create the folder)

- Adjust the permissions of the folder by running

sudo chmod a+rwx postgres_data

Prepare Data

Download NY Trips Dataset:

- https://www1.nyc.gov/site/tlc/about/tlc-trip-record-data.page

- https://www1.nyc.gov/assets/tlc/downloads/pdf/data_dictionary_trip_records_yellow.pdf

Note: For changing to from parquet format to csv, use sample pandas function:

import pandas as pd

# Read the Parquet file

df = pd.read_parquet('../data/yellow_tripdata_2024-01.parquet.parquet')

df.head()

# Save as CSV file

df.to_csv('../data/yellow_tripdata_2024-01.parquet.csv', index=False)

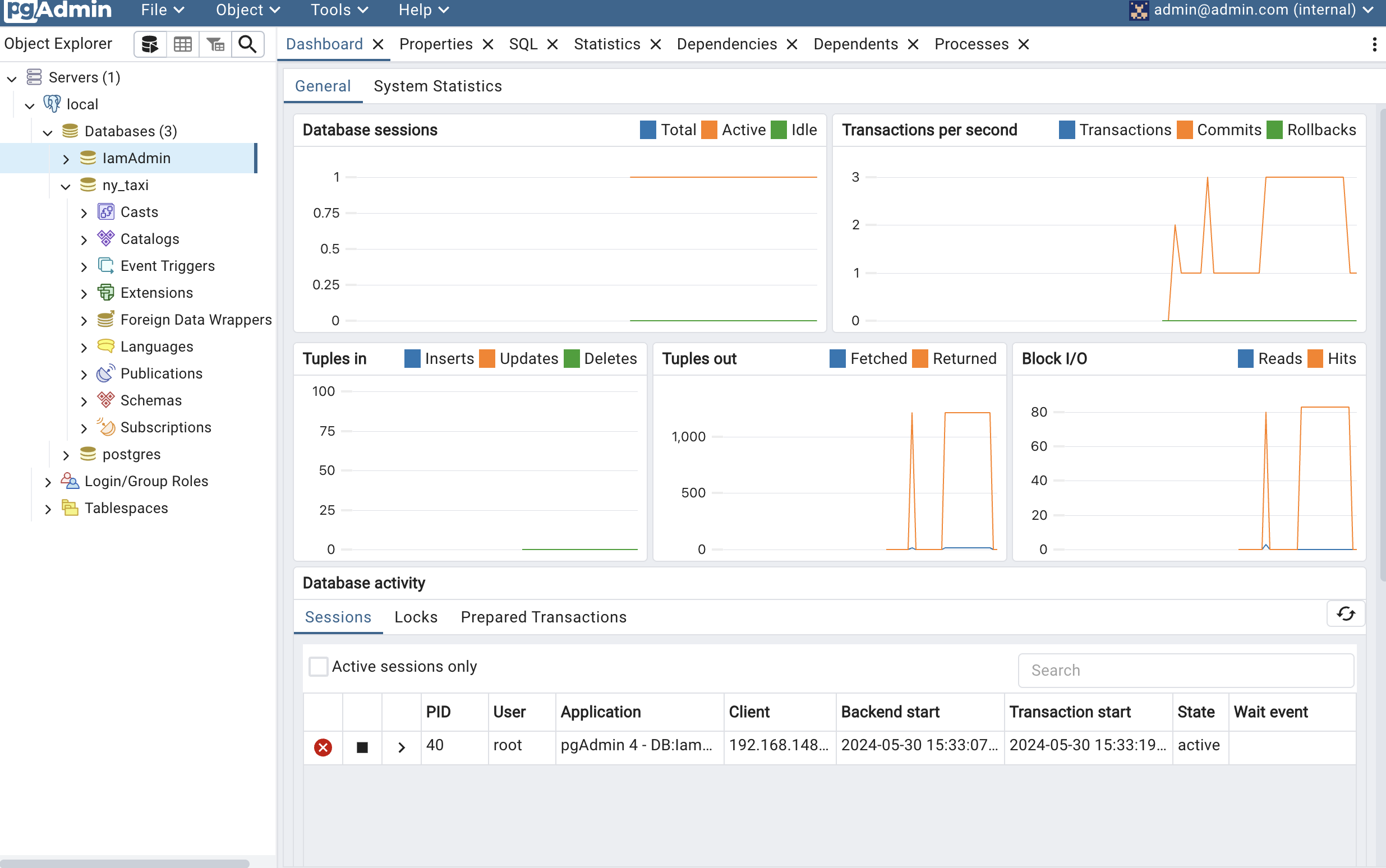

pgAdmin

Running pgAdmin

docker run -it \

-e PGADMIN_DEFAULT_EMAIL="admin@admin.com" \

-e PGADMIN_DEFAULT_PASSWORD="root" \

-p 8080:80 \

dpage/pgadmin4

Test Connection

- Open pg-test-connection.ipynb

- Run All Code Block and make sure mo error occurs

Ingest Data

- Running locally

Download this load_data_2_postgres.py file and run the command with following arguments

#!/usr/bin/env python

# coding: utf-8

import os

import argparse

from time import time

import pandas as pd

from sqlalchemy import create_engine

def load_data_to_postgres(params):

# Connect to PostgreSQL database

local = params.local

user = params.user

password = params.password

host = params.host

port = params.port

db = params.db

table_name = params.table_name

csv_name = params.csv_file

if local == 'True':

os.system(f"wget {csv_name} -O {csv_name}")

engine = create_engine(f'postgresql://{user}:{password}@{host}:{port}/{db}')

# Load data from Parquet file in chunks

df_iter = pd.read_csv(csv_name, iterator=True, chunksize=100000, index_col=False)

# Process each chunk

for df in df_iter:

t_start = time()

# Convert columns to datetime

df.tpep_pickup_datetime = pd.to_datetime(df.tpep_pickup_datetime)

df.tpep_dropoff_datetime = pd.to_datetime(df.tpep_dropoff_datetime)

# Load chunk into PostgreSQL database

df.to_sql(name=table_name, con=engine, if_exists='append', index=False) # Exclude the "index" column

t_end = time()

print('inserted another chunk, took %.3f second' % (t_end - t_start))

engine.dispose()

if __name__ == '__main__':

parser = argparse.ArgumentParser(description='Ingest CSV data to Postgres')

parser.add_argument('--local', required=False, help='Check if running locally', default='False')

parser.add_argument('--user', required=True, help='user name for postgres')

parser.add_argument('--password', required=True, help='password for postgres')

parser.add_argument('--host', required=True, help='host for postgres')

parser.add_argument('--port', required=True, help='port for postgres')

parser.add_argument('--db', required=True, help='database name for postgres')

parser.add_argument('--table_name', required=True, help='name of the table where we will write the results to')

parser.add_argument('--csv_file', required=True, help='csv_file of the csv file')

args = parser.parse_args()

load_data_to_postgres(args)

To understand the arguments:

if __name__ == '__main__':

parser = argparse.ArgumentParser(description='Ingest CSV data to Postgres')

parser.add_argument('--local', required=False, help='Check if running locally', default='False')

parser.add_argument('--user', required=True, help='user name for postgres')

parser.add_argument('--password', required=True, help='password for postgres')

parser.add_argument('--host', required=True, help='host for postgres')

parser.add_argument('--port', required=True, help='port for postgres')

parser.add_argument('--db', required=True, help='database name for postgres')

parser.add_argument('--table_name', required=True, help='name of the table where we will write the results to')

parser.add_argument('--csv_file', required=True, help='csv_file of the csv file')

args = parser.parse_args()

load_data_to_postgres(args) # Function to load data from local2postgres

Execute Python function with arguments“

python3 load_data_2_postgres.py \

--user=root \

--password=root \

--host=localhost \

--port=5432 \

--db=ny_taxi \

--table_name=yellow_taxi_trips \

--csv_file=${URL}

Result: Data inserted to Table

inserted another chunk, took 6.863 second

inserted another chunk, took 6.878 second

inserted another chunk, took 6.534 second

inserted another chunk, took 6.806 second

inserted another chunk, took 6.670 second

inserted another chunk, took 6.743 second

- Build the image, Download the Dockerfile

docker build -t ny_taxi_ingest:v001 .

- Run the script with Docker

docker run -it \

ny_taxi_ingest:v001 \

--user=root \

--password=root \

--host=modest_panini.orb.local \

--port=5432 \

--db=ny_taxi \

--table_name=yellow_taxi_trips \

--csv_file=${URL}

Result: Data insert to table

inserted another chunk, took 8.036 second

inserted another chunk, took 7.992 second

inserted another chunk, took 8.042 second

Run Postgres and pgAdmin together

Using Docker-Compose file you have download above and run

docker-compose up

# Using `docker-compose up -d` if you don't want to trailing logs...

Seeing and access the Postgres and PgAdmin via:

- Postgres: locahost:5432 (not UI access)

- PgAdmin: localhost:8080 (UI)

Run it:

docker-compose up

Run in detached mode:

docker-compose up -d

Shutting it down:

docker-compose down

Note: to make pgAdmin configuration persistent, create a folder data_pgadmin. Change its permission via

sudo chown 5050:5050 data_pgadmin

and mount it to the /var/lib/pgadmin folder:

services:

pgadmin:

image: dpage/pgadmin4

volumes:

- ./data_pgadmin:/var/lib/pgadmin

...

Create analysis SQL

-- Query to get the total number of trips

SELECT COUNT(*) AS total_trips

FROM yellow_taxi_trips;

-- Query to get the average trip distance

SELECT AVG(trip_distance) AS avg_distance

FROM yellow_taxi_trips;

-- Query to get the maximum fare amount

SELECT MAX(fare_amount) AS max_fare

FROM yellow_taxi_trips;

-- Query to get the total revenue

SELECT SUM(fare_amount) AS total_revenue

FROM yellow_taxi_trips;

Tears down

Keep it run until you finish the homework, to shut down everything, use

docker stop $(docker ps -aq)

Here, docker ps -aq lists all running container IDs and docker stop stops each container.

Screenshots

Additional Scripts

-- Database: ny_taxi

-- DROP DATABASE IF EXISTS ny_taxi;

CREATE DATABASE ny_taxi

WITH

OWNER = root

ENCODING = 'UTF8'

LC_COLLATE = 'en_US.utf8'

LC_CTYPE = 'en_US.utf8'

TABLESPACE = pg_default

CONNECTION LIMIT = -1

IS_TEMPLATE = False;