Airflow Installation for Data Engineer (Part 2)

Setup (No-frills)

Airflow Setup

- Create a new sub-directory called `airflow` in your `project` dir (such as the one we’re currently in)

- Set the Airflow user:

On Linux, the quick-start needs to know your host user ID and needs the group ID set to 0.

Otherwise, the files created in dags, logs and plugins will be owned by the root user.

Make sure to configure both for docker-compose:

mkdir -p ./dags ./logs ./plugins

echo -e "AIRFLOW_UID=$(id -u)" >> .env

On Windows you will probably also need it. If you use MINGW/GitBash, execute the same command.

To get rid of the warning (“AIRFLOW_UID is not set”), you can create a .env file with this content:

AIRFLOW_UID=50000
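Because `>>` appends, rerunning the `echo` command above leaves duplicate `AIRFLOW_UID` lines in `.env`. A minimal sketch of an idempotent alternative follows; the helper name `set_env_var` is hypothetical and not part of the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in an env file, replacing any earlier
# line for the same key instead of appending a duplicate.
set_env_var() {
  local key="$1" value="$2" file="$3"
  touch "$file"
  # Keep every line except previous definitions of this key.
  grep -v "^${key}=" "$file" > "${file}.tmp" || true
  echo "${key}=${value}" >> "${file}.tmp"
  mv "${file}.tmp" "$file"
}

mkdir -p ./dags ./logs ./plugins
set_env_var AIRFLOW_UID "$(id -u)" .env
```

Running the script twice leaves a single `AIRFLOW_UID` line, and the other keys in `.env` are kept as they were.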

- Docker Build:

When you want to run Airflow locally, you might want to use an extended image containing some additional dependencies. For example, you might add new Python packages or upgrade Airflow providers to a later version.

Create a Dockerfile that uses a recent Airflow image, such as apache/airflow:2.9.1 (the tag used in the Dockerfile at the end of this page), as the base image, and customize it by:

- Integrating `requirements.txt` to install libraries via `pip install`

- Copy docker-compose-nofrills.yaml, .env_example & entrypoint.sh from this repo.

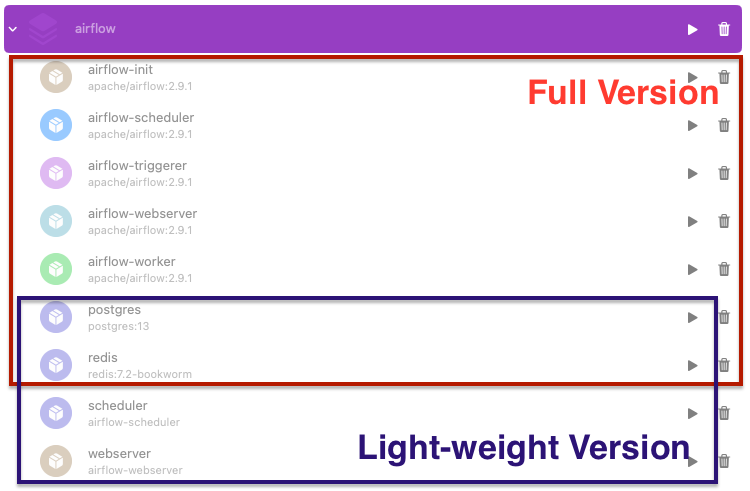

The changes from the official setup are:

- Removal of `redis` queue, `worker`, `triggerer`, `flower` & `airflow-init` services, and changing from `CeleryExecutor` (multi-node) mode to `LocalExecutor` (single-node) mode

- Inclusion of `.env` for better parametrization & flexibility

- Inclusion of a simple `entrypoint.sh` in the `webserver` container, responsible for initializing the database and creating the login user (admin)

- Updated `Dockerfile` to grant permissions on executing `scripts/entrypoint.sh`

.env:

- Rebuild your `.env` file by adding the following items to the file (but make sure your `AIRFLOW_UID` remains):

??? tip “Configure .env”

```shell title="Environment Config"

POSTGRES_USER=airflow
POSTGRES_PASSWORD=airflow
POSTGRES_DB=airflow
AIRFLOW__CORE__EXECUTOR=LocalExecutor
AIRFLOW__SCHEDULER__SCHEDULER_HEARTBEAT_SEC=10
AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://${POSTGRES_USER}:${POSTGRES_PASSWORD}@postgres:5432/${POSTGRES_DB}
AIRFLOW_CONN_METADATA_DB=postgres+psycopg2://airflow:airflow@postgres:5432/airflow
AIRFLOW_VAR__METADATA_DB_SCHEMA=airflow
AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION=True
AIRFLOW__CORE__LOAD_EXAMPLES=False

```
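A missing key in `.env` usually only shows up once the containers fail to start. As a rough sketch, the check below reports which of the keys from the listing above are absent; the function name `check_env_keys` and the `REQUIRED_KEYS` variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every expected key that has no KEY=... line in
# the given env file; returns non-zero if at least one key is missing.
check_env_keys() {
  local file="$1" missing=0 key
  shift
  for key in "$@"; do
    if ! grep -q "^${key}=" "$file" 2>/dev/null; then
      echo "missing: ${key}"
      missing=1
    fi
  done
  return "$missing"
}

# Keys taken from the .env listing above (a subset, chosen for illustration).
REQUIRED_KEYS="AIRFLOW_UID POSTGRES_USER POSTGRES_PASSWORD POSTGRES_DB AIRFLOW__CORE__EXECUTOR AIRFLOW__CORE__SQL_ALCHEMY_CONN"

# REQUIRED_KEYS is left unquoted on purpose so it splits into separate keys.
check_env_keys .env $REQUIRED_KEYS || echo "fix .env before starting the stack"
```

The check only looks for the presence of each key, not for a sensible value, so it is a quick sanity test rather than a validation of the configuration.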

Here’s how the final versions of your Dockerfile, docker-compose-nofrills and entrypoint should look.

Note: Move entrypoint.sh into the scripts folder of your project with:

mkdir -p ./scripts

mv {URL}/entrypoint.sh ./scripts/entrypoint.sh
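Because the compose file bind-mounts `./scripts` into the containers, the copy of `entrypoint.sh` on the host is the one that actually runs, so on Linux it needs the executable bit there as well. A small sketch, assuming the script already sits in `./scripts`; `ensure_executable` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure a script exists and carries the executable bit.
ensure_executable() {
  local path="$1"
  if [ ! -f "$path" ]; then
    echo "not found: ${path}" >&2
    return 1
  fi
  # Only touch the permissions when the bit is actually missing.
  [ -x "$path" ] || chmod +x "$path"
}

mkdir -p ./scripts
ensure_executable ./scripts/entrypoint.sh || echo "copy entrypoint.sh into ./scripts first"
```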

Execution

By following the original setup, you can create and trigger your own job.

Pull the images and start the containers:

docker-compose -f docker-compose-nofrills.yml up -d

After launching the no-frills Airflow stack, you will see that only the fundamental services are installed:

[+] Running 3/3

✔ Container airflow-postgres-1 Started 1.2s

✔ Container airflow-scheduler-1 Started 1.6s

✔ Container airflow-webserver-1 Started

List all Docker containers:

docker-compose ps
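A container in the "Started" state does not yet mean the web UI is answering. As a sketch, the helper below polls a URL until it responds; it assumes `curl` is installed on the host and that the webserver is published on port 8080 as in the compose file. The function name `wait_for_url` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers successfully or the
# attempts run out. Arguments: URL, number of attempts, delay in seconds.
wait_for_url() {
  local url="$1" attempts="${2:-30}" delay="${3:-5}" i
  for i in $(seq 1 "$attempts"); do
    # -f makes curl fail on HTTP errors, -s keeps it quiet.
    if curl -fs -o /dev/null "$url"; then
      echo "up after ${i} attempt(s)"
      return 0
    fi
    sleep "$delay"
  done
  echo "no response from ${url}" >&2
  return 1
}

# Example call against the Airflow webserver health endpoint:
# wait_for_url http://localhost:8080/health 30 5
```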

Problems

Submit the issue to the channel, or to the Airflow GitHub repository if relevant.

You can get the custom docker-compose-nofrills file here

Base Image

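# Base image; AIRFLOW_HOME is where dags, logs and scripts live
FROM apache/airflow:2.9.1
ENV AIRFLOW_HOME=/opt/airflow

# System packages are installed as root
USER root
RUN apt-get update -qq && apt-get install -y -qq vim unzip
# git gcc g++ -qqq

# Python packages are installed as the airflow user
COPY requirements.txt .
USER airflow
RUN pip install --no-cache-dir -r requirements.txt

# Ref: https://airflow.apache.org/docs/docker-stack/recipes.html
SHELL ["/bin/bash", "-o", "pipefail", "-e", "-u", "-x", "-c"]

USER root
WORKDIR $AIRFLOW_HOME
COPY scripts scripts
# Make the entrypoint script itself executable, not just the directory
RUN chmod +x scripts/entrypoint.sh

USER $AIRFLOW_UID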

Docker Compose

version: '3'
services:
  postgres:
    image: postgres:13
    env_file:
      - .env
    volumes:
      - postgres-db-volume:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "airflow"]
      interval: 5s
      retries: 5
    restart: always

  scheduler:
    build: .
    command: scheduler
    restart: on-failure
    depends_on:
      - postgres
    env_file:
      - .env
    volumes:
      - ./dags:/opt/airflow/dags
      - ./logs:/opt/airflow/logs
      - ./plugins:/opt/airflow/plugins
      - ./scripts:/opt/airflow/scripts

  webserver:
    build: .
    entrypoint: ./scripts/entrypoint.sh
    restart: on-failure
    depends_on:
      - postgres
      - scheduler
    env_file:
      - .env
    volumes:
      - ./dags:/opt/airflow/dags
      - ./logs:/opt/airflow/logs
      - ./plugins:/opt/airflow/plugins
      - ./scripts:/opt/airflow/scripts
    user: "${AIRFLOW_UID:-50000}:0"
    ports:
      - "8080:8080"
    healthcheck:
      test: [ "CMD-SHELL", "[ -f /home/airflow/airflow-webserver.pid ]" ]
      interval: 30s
      timeout: 30s
      retries: 3

volumes:
  postgres-db-volume:

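Entrypoint

The entrypoint script runs the Airflow commands that initialize the database, create the admin user and start the webserver:

#!/usr/bin/env bash
# Apply migrations and initialize the metadata database
# (both commands are deprecated since Airflow 2.7 in favor of `airflow db migrate`)
airflow db upgrade
airflow db init
# Create the login user (admin/admin)
airflow users create -r Admin -u admin -p admin -e admin@example.com -f admin -l airflow
# "$_AIRFLOW_WWW_USER_USERNAME" -p "$_AIRFLOW_WWW_USER_PASSWORD"
airflow webserver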
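The commented-out line in the script hints at reading the login from `_AIRFLOW_WWW_USER_USERNAME` and `_AIRFLOW_WWW_USER_PASSWORD` instead of hard-coding it. One possible variant is sketched below, with `admin`/`admin` as the fallback. The `run` wrapper, the `start_airflow` function and the `DRY_RUN` switch are hypothetical additions that let the script be tried outside the container.

```shell
#!/usr/bin/env bash
# Sketch of an entrypoint variant: credentials come from the environment,
# with admin/admin as the fallback used in the script above.
ADMIN_USER="${_AIRFLOW_WWW_USER_USERNAME:-admin}"
ADMIN_PASSWORD="${_AIRFLOW_WWW_USER_PASSWORD:-admin}"

# Hypothetical DRY_RUN switch: when set to 1, commands are printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

start_airflow() {
  # Stop early if the metadata database cannot be prepared.
  run airflow db upgrade || return 1
  # A failure here (for example, the user already exists) is not fatal.
  run airflow users create -r Admin -u "$ADMIN_USER" -p "$ADMIN_PASSWORD" \
    -e admin@example.com -f admin -l airflow
  run airflow webserver
}

# Print the commands only; inside the container, call start_airflow directly.
DRY_RUN=1 start_airflow
```

With `DRY_RUN=1` the script prints the three `airflow` commands it would execute, which makes it easy to confirm which username and password would be used before starting the stack.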